OpenAI Acknowledges Risk of Bioweapons with New ChatGPT Model

OpenAI, the company behind ChatGPT, has recently admitted that its latest model, dubbed “o1,” could be exploited to help develop biological weapons.

Last week, OpenAI unveiled the new model, boasting enhanced reasoning and problem-solving capabilities.

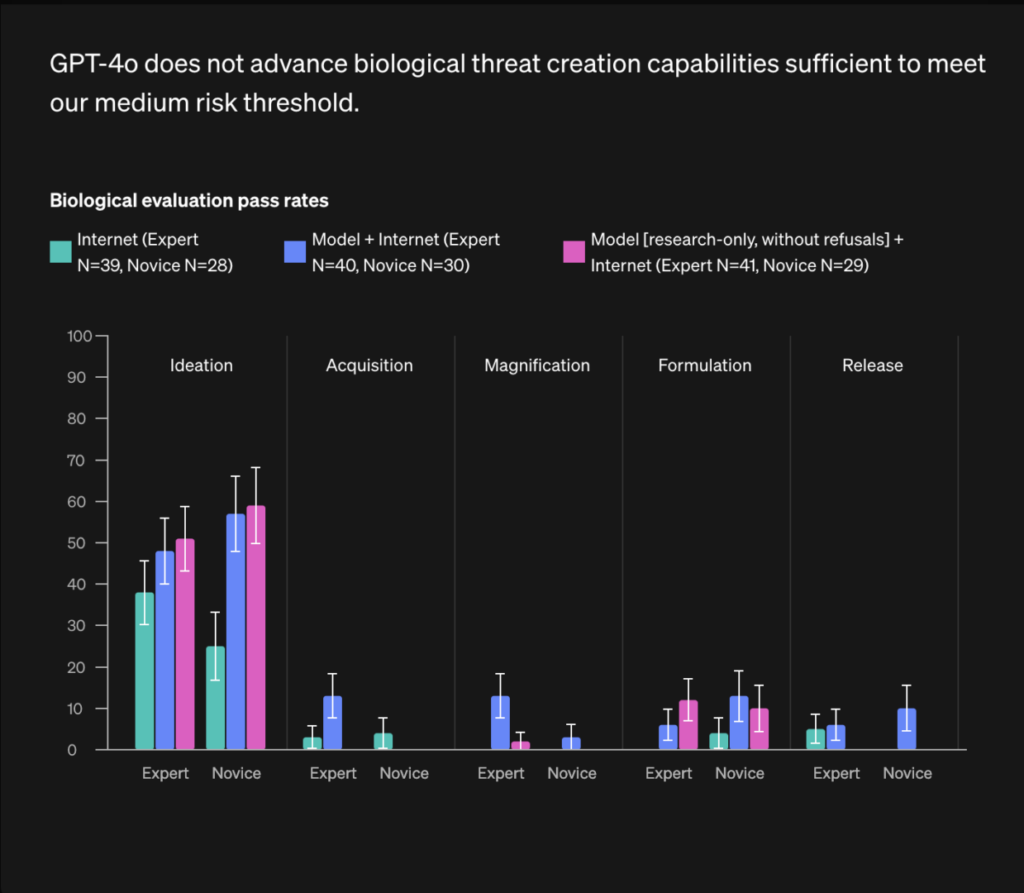

According to the company’s system card, which explains how the AI operates, the new models were rated as “medium risk” for issues related to chemical, biological, radiological, and nuclear (CBRN) weapons.

This is the highest risk level OpenAI has ever assigned to its models. The company noted that the model’s improved capabilities could meaningfully increase the proficiency of experts in creating bioweapons.

Image Credit: OpenAI GPT-4o System Card page

OpenAI’s CTO, Mira Murati, stated that the company is exercising particular caution in introducing o1 to the public due to its advanced capabilities.

Red teamers and experts from various scientific disciplines have rigorously tested the model, pushing it to its limits. Murati said that the current models outperformed their predecessors on overall safety metrics.

Experts caution that AI software with advanced features such as step-by-step reasoning poses an elevated risk of misuse in the hands of malicious actors.

Noted AI scientist Yoshua Bengio, a computer science professor at the University of Montreal, highlighted the need for regulations such as California’s debated bill SB 1047. The bill aims to compel developers of high-cost models to reduce the risk of their models being used for bioweapon development.

In January 2024, a study found that OpenAI’s GPT-4 model was of only limited use in bioweapon development. The study was initiated to allay concerns about the potential misuse of AI technologies for harmful purposes.

Previously, during a Capitol Hill hearing, Mark Zuckerberg refuted safety concerns raised by Tristan Harris, co-founder of the Center for Humane Technology, that Meta AI could be used to help create weapons of mass destruction.

Meanwhile, earlier reports indicated that OpenAI collaborated with Los Alamos National Laboratory to explore the potential uses and risks of AI in scientific research.

Image via Shutterstock

Disclaimer: This content was partially produced with the help of Benzinga Neuro and was reviewed and published by Benzinga editors.